The Future of Computing Depends on Making It Reversible

It’s time to embrace reversible computing, which could offer dramatic improvements in energy efficiency

For more than 50 years, computers have made steady and dramatic improvements, all thanks to Moore’s Law—the exponential increase over time in the number of transistors that can be fabricated on an integrated circuit of a given size. Moore’s Law owed its success to the fact that as transistors were made smaller, they became simultaneously cheaper, faster, and more energy efficient. The payoff from this win-win-win scenario enabled reinvestment in semiconductor fabrication technology that could make even smaller, more densely packed transistors. And so this virtuous circle continued, decade after decade.

Now though, experts in industry, academia, and government laboratories anticipate that semiconductor miniaturization won’t continue much longer—maybe 5 or 10 years. Making transistors smaller no longer yields the improvements it used to. The physical characteristics of small transistors caused clock speeds to stagnate more than a decade ago, which drove the industry to start building chips with multiple cores. But even multicore architectures must contend with increasing amounts of “dark silicon,” areas of the chip that must be powered off to avoid overheating.

Heroic efforts are being made within the semiconductor industry to try to keep miniaturization going. But no amount of investment can change the laws of physics. At some point—now not very far away—a new computer that simply has smaller transistors will no longer be any cheaper, faster, or more energy efficient than its predecessors. At that point, the progress of conventional semiconductor technology will stop.

What about unconventional semiconductor technology, such as carbon-nanotube transistors, tunneling transistors, or spintronic devices? Unfortunately, many of the same fundamental physical barriers that prevent today’s complementary metal-oxide-semiconductor (CMOS) technology from advancing very much further will still apply, in a modified form, to those devices. We might be able to eke out a few more years of progress, but if we want to keep moving forward decades down the line, new devices are not enough: We’ll also have to rethink our most fundamental notions of computation.

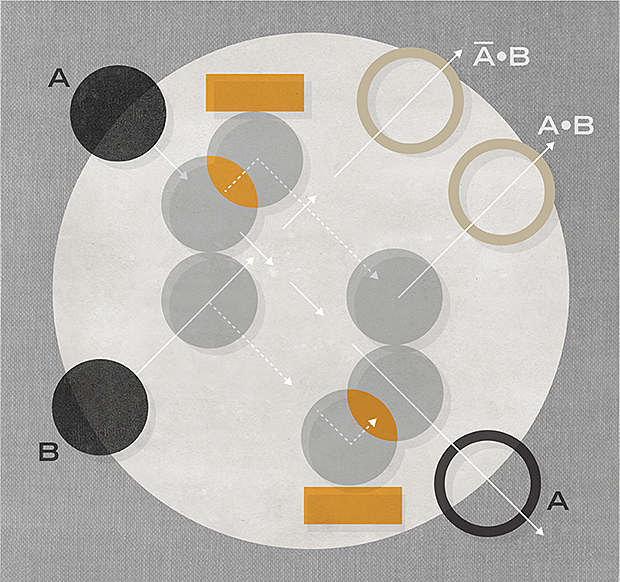

Let me explain. For the entire history of computing, our calculating machines have operated in a way that causes the intentional loss of some information (it’s destructively overwritten) in the process of performing computations. But for several decades now, we have known that it’s possible in principle to carry out any desired computation without losing information—that is, in such a way that the computation could always be reversed to recover its earlier state. This idea of reversible computing goes to the very heart of thermodynamics and information theory, and indeed it is the only possible way within the laws of physics that we might be able to keep improving the cost and energy efficiency of general-purpose computing far into the future.
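To make the distinction concrete, here is a minimal sketch in Python. It is an illustration rather than anything taken from Landauer's work or from a real chip design: it compares an ordinary AND gate with the Toffoli gate, a standard textbook example of a reversible logic gate that this article has not introduced. The function and variable names are chosen here purely for illustration.

```python
from itertools import product

def and_gate(a, b):
    """Conventional AND: two input bits in, one output bit out."""
    return a & b

def toffoli(a, b, c):
    """Toffoli gate: passes a and b through, flips c only when a and b are both 1."""
    return a, b, c ^ (a & b)

# AND is many-to-one: group the inputs by the output they produce.
and_preimages = {}
for a, b in product((0, 1), repeat=2):
    and_preimages.setdefault(and_gate(a, b), []).append((a, b))

# Toffoli is one-to-one on 3-bit states, and applying it twice undoes it.
states = list(product((0, 1), repeat=3))
toffoli_outputs = {toffoli(*s) for s in states}
is_bijection = len(toffoli_outputs) == len(states)
is_self_inverse = all(toffoli(*toffoli(*s)) == s for s in states)

# With the target bit c set to 0, the third output equals a AND b,
# but a and b are carried along, so no input information is overwritten.
and_via_toffoli = {(a, b): toffoli(a, b, 0)[2] for a, b in product((0, 1), repeat=2)}

print(and_preimages)
print(is_bijection, is_self_inverse)
print(and_via_toffoli)
```

Three different input pairs produce an AND output of 0, so the output alone cannot tell you which one went in. The Toffoli version computes the same result while keeping enough bits around that the step can always be run backward.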

In the past, reversible computing never received much attention. That’s because it’s very hard to implement, and there was little reason to pursue this great challenge so long as conventional technology kept advancing. But with the end now in sight, it’s time for the world’s best physics and engineering minds to commence an all-out effort to bring reversible computing to practical fruition.

The history of reversible computing begins with physicist Rolf Landauer of IBM, who published a paper in 1961 titled “Irreversibility and Heat Generation in the Computing Process.” In it, Landauer argued that the logically irreversible character of conventional computational operations has direct implications for the thermodynamic behavior of a device that is carrying out those operations.

Landauer’s reasoning can be understood by observing that the most fundamental laws of physics are reversible, meaning that if you had complete knowledge of the state of a closed system at some time, you could always—at least in principle—run the laws of physics in reverse and determine the system’s exact state at any previous time.

To better see that, consider a game of billiards—an ideal one with no friction. If you were to make a movie of the balls bouncing off one another and the bumpers, the movie would look normal whether you ran it backward or forward: The collision physics would be the same, and you could work out the future configuration of the balls from their past configuration or vice versa equally easily.
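The movie-in-reverse idea can be tried out in a few lines of Python. This is a deliberately simplified sketch, not a physics engine: it assumes a single ball, bumpers only (no ball-to-ball collisions), and integer grid positions so that the arithmetic is exact. The table size, starting state, and function names are all made up for the illustration.

```python
def move_axis(pos, vel, size):
    """Move along one axis of a table whose cells are numbered 0..size-1.

    The bumpers sit half a cell beyond the end cells, and the ball is
    mirrored back when it crosses one. Assumes abs(vel) <= size.
    """
    pos += vel
    if pos > size - 1:
        pos, vel = 2 * size - 1 - pos, -vel
    elif pos < 0:
        pos, vel = -1 - pos, -vel
    return pos, vel

def step(state, width, height):
    """Advance the ball by one tick of frictionless motion."""
    x, y, vx, vy = state
    x, vx = move_axis(x, vx, width)
    y, vy = move_axis(y, vy, height)
    return (x, y, vx, vy)

def reverse(state):
    """Run the movie backward: same position, opposite velocity."""
    x, y, vx, vy = state
    return (x, y, -vx, -vy)

def run(state, ticks, width, height):
    """Apply step() the given number of times."""
    for _ in range(ticks):
        state = step(state, width, height)
    return state

WIDTH, HEIGHT, TICKS = 20, 10, 137   # hypothetical table and duration
start = (3, 7, 4, -3)                # x, y, vx, vy

later = run(start, TICKS, WIDTH, HEIGHT)
# Flip the velocity, play the same rules for the same time, flip again.
recovered = reverse(run(reverse(later), TICKS, WIDTH, HEIGHT))

print(recovered == start)  # True
```

The same `step` rule is used in both directions. Only the sign of the velocity changes, which is the sense in which the collision physics is identical whether the movie runs forward or backward.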

The same fundamental reversibility holds for quantum-scale physics. As a consequence, you can’t have a situation in which two different detailed states of any physical system evolve into the exact same state at some later time, because that would make it impossible to determine the earlier state from the later one. In other words, at the lowest level in physics, information cannot be destroyed.