See Straight Through Walls by Augmenting Your Eyeballs With Drones

A drone combined with a HoloLens can simulate X-ray vision while offering easy and intuitive control

Robots make fantastic remote-sensing systems, ideal for sending into disaster areas or for search and rescue. Drones in particular can move rapidly over large areas or through structures, identifying damage or looking for survivors by sending a video feed from their on-board cameras to a remote operator. While the data that drones provide can be invaluable, managing them can be quite difficult, especially once they get beyond line of sight.

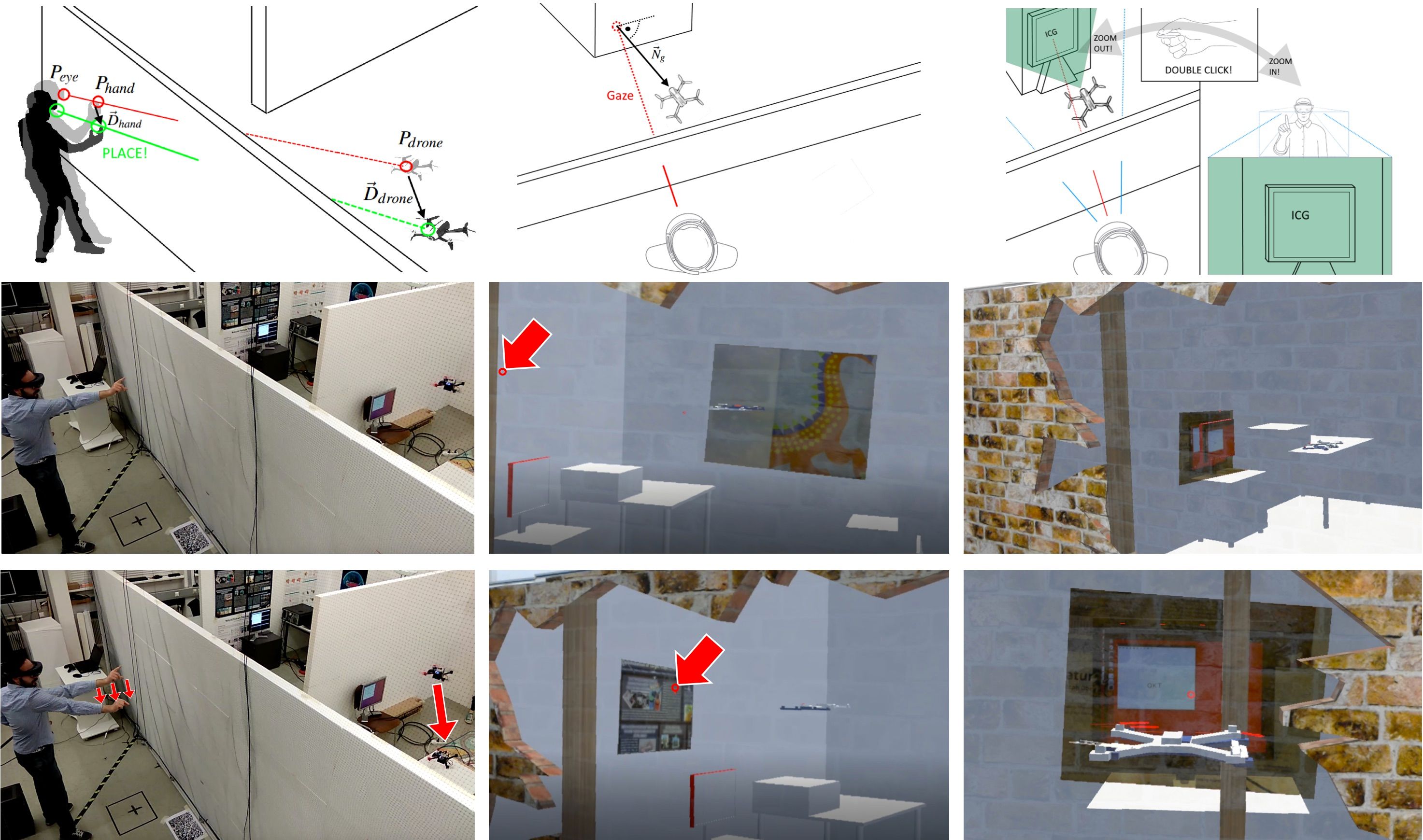

Researchers from Graz University of Technology, in Styria, Austria, led by Okan Erat, want to change the way we interface with drones, using augmented reality to turn them from complicated flying robots into remote cameras that an untrained user can easily control. Through a HoloLens—Microsoft’s mixed reality head-mounted display—a drone can enable a sort of X-ray vision, allowing you to see straight through walls and making controlling the drone as easy as grabbing a virtual drone and putting it exactly where you want it to be.

HoloLens works by projecting images into your field of vision. It carefully tracks where your head is pointing, so it can overlay a video feed from a drone on the other side of an opaque wall on top of what you’re looking at, making it appear as though the wall is transparent. The drone’s camera can only see a limited field of view (how limited depends on the camera it is equipped with), so if you turn your head to look in a different direction (or move around), the drone will reposition itself to give you the view that you want.
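To make the geometry a little more concrete, here is a minimal sketch of how a head pose could be turned into a drone target. It is not the researchers’ implementation: the function name `drone_target_pose`, the flat-wall model, the `standoff` distance, and the z-up coordinate convention are all assumptions made for illustration. The idea is to find where the user’s gaze ray hits the wall and then place the drone a short distance past that point, facing the same way the user is looking.

```python
import math


def drone_target_pose(head_pos, gaze_dir, wall_point, wall_normal, standoff=0.5):
    """Hypothetical helper: return ((x, y, z), yaw) for the drone, or None.

    Assumptions (not from the article): the wall is an infinite plane given by
    a point and a normal, z is up, and yaw is measured about the z axis.
    """
    # Normalize the gaze direction so distances along the ray are in meters.
    norm = math.sqrt(sum(c * c for c in gaze_dir))
    if norm == 0.0:
        return None
    d = [c / norm for c in gaze_dir]

    # Ray-plane intersection: head_pos + t * d lies on the wall plane.
    denom = sum(n * c for n, c in zip(wall_normal, d))
    if abs(denom) < 1e-9:
        return None  # gaze is parallel to the wall
    t = sum(n * (w - h) for n, w, h in zip(wall_normal, wall_point, head_pos)) / denom
    if t <= 0.0:
        return None  # the wall is behind the user

    # Place the drone `standoff` meters past the wall, measured along the gaze ray.
    dist = t + standoff
    position = tuple(h + dist * c for h, c in zip(head_pos, d))

    # Point the drone's camera the same way the user is looking.
    yaw = math.atan2(d[1], d[0])
    return position, yaw


if __name__ == "__main__":
    # User stands at the origin, eyes 1.6 m up, looking at a wall 2 m ahead.
    print(drone_target_pose((0.0, 0.0, 1.6), (1.0, 0.0, 0.0),
                            (2.0, 0.0, 0.0), (1.0, 0.0, 0.0)))
```

A real system would also have to account for obstacles, the camera’s actual field of view, and smoothing so the drone does not chase every small head movement. This sketch only covers the basic mapping from gaze to target pose.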

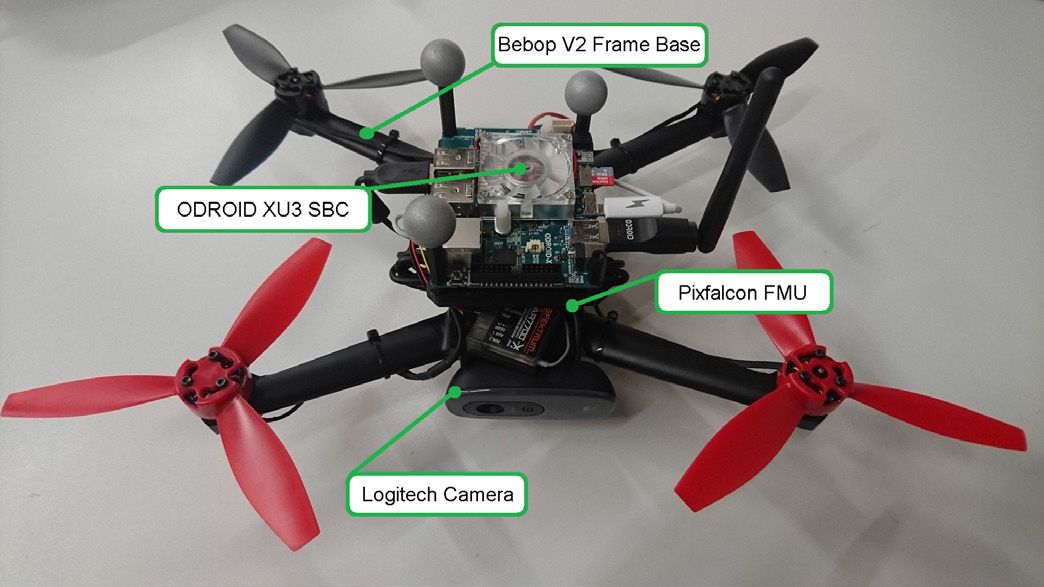

While the drone moves autonomously for most of the looking around, it’s easy to reposition it manually, again taking advantage of the augmented reality of the system. The location of the drone is visible in the HoloLens as a little model, and you simply reach your hand out, grab that model, and move it to where you want it to be. For the purposes of this demo, the drone relies on an external OptiTrack localization system for its path planning, but onboard SLAM (simultaneous localization and mapping) on drones is something that’s been done before.

To test how much better this system is, the researchers set up a study in which participants were asked to do remote tasks like reading text on a monitor through the drone’s cameras or positioning the drone in a specific spot. They did this either through the augmented reality system or with a more traditional remote controller along with a first-person video stream from the drone. Participants universally preferred the augmented reality view and performed the study tasks twice as fast. They said that their control over the drone was more precise and more immersive (“cool, natural, and accurate”), and that the augmented reality system had them feeling like they were “inside the scene.”

The next step here is to reduce the system’s present reliance on external help, like tracking and environmental modeling, since those are the biggest restrictions on real-world usefulness at the moment. But these are solvable problems, and as drones themselves get better and cheaper (and AR/VR systems do as well), a system this useful will hopefully become something that we actually get to try at some point. After all, we’re lousy at flying drones, and we’ve also always wanted X-ray vision.
